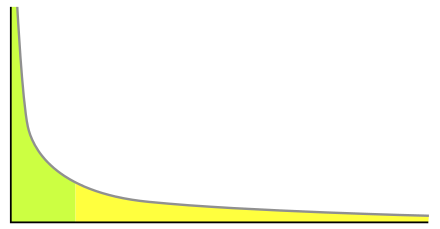

The long long tail of AI applications

My answer so far has always been: "No, bare LLMs are not going to compete with this product." I think that people are failing to understand the distinction between different classes of AI companies. I see it like this:

- Foundational AI - creating models like GPT-4 (text), Sora (video) etc.

- Applied AI - using existing models to create smart applications.

Note that there are also companies not focusing on AI - they will start lagging behind, let's forget about them ;)

There are orders of magnitude (!) more companies that will deal with Applied AI than Foundational AI, and they will be very busy for decades to come. Here's why:

- To get the most out of LLMs, you have to ask the right questions.

- LLMs have access to very limited context by default.

- LLMs are not AGI and agents need to be programmed manually.

- LLMs don't understand specialized problems.

- Integrating AI in everything is a lot of work.

1 - To get the most out of LLMs, you have to ask the right questions.

In "The Hitchhiker's Guide to the Galaxy", a race of hyper-intelligent beings builds a computer to find the answer to "The Ultimate Question of Life, the Universe, and Everything". After millions of years of calculation the computer famously answers "42". The lesson is that we have to ask the right questions to get good answers. How does this relate to LLMs? Currently ChatGPT has an easy time looking intelligent because we are driving the conversation and responding pretty intelligently in the dialog with the LLM. A large part of the intelligence in conversations with ChatGPT comes from the human.

When applying LLMs to a product, we have to be very clear about what question we want answered, for all users of that product. This is difficult, and will require quite some brain power from the engineers at Applied AI companies.

2 - LLMs have access to very limited context by default.

There's a whole world of knowledge in your head whenever you are working in a product. Assume you work in HR and are trying to process a contract change with one of your employees and you want a review of your proposal. Instead of asking the HR manager, you decide to first ask ChatGPT. When you start typing the question into ChatGPT, you will quickly notice you'll need to give it a lot of context for it to give a correct response:

- What was the contract before and why are we making this change?

- In which country are you located and are there any recent/upcoming regulations that might make this contract change problematic from a legal perspective?

- Is this change OK under standard company HR policy or is it an exception?

- How will this contract change affect the performance/output/tenure of the employee?

- How fair is this change towards the rest of the organization? How likely is the employee to discuss this with others and what are the possible effects of this?

A lot of this context will be present in the head of the HR manager you'd normally ask. If we want LLMs to give an answer without all the manual context copy/pasting, we need Agents to pass the right context to them.
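To make "passing the right context" a bit more concrete, here is a minimal sketch of what such a step could look like for the HR example. The `ContractChangeContext` class, its fields, and `build_prompt` are all hypothetical names made up for illustration; a real product would pull these values from its own HR data.

```python
from dataclasses import dataclass


@dataclass
class ContractChangeContext:
    """Hypothetical fields mirroring some of the questions listed above."""
    previous_contract: str
    reason_for_change: str
    country: str
    follows_standard_policy: bool


def build_prompt(question: str, ctx: ContractChangeContext) -> str:
    """Prepend the gathered context to the user's question."""
    policy = (
        "standard policy" if ctx.follows_standard_policy
        else "an exception to policy"
    )
    lines = [
        "You are reviewing a proposed employment contract change.",
        f"Previous contract: {ctx.previous_contract}",
        f"Reason for change: {ctx.reason_for_change}",
        f"Country: {ctx.country}",
        f"Policy status: {policy}",
        "",
        f"Question: {question}",
    ]
    return "\n".join(lines)
```

The point is not the string formatting but the fact that someone has to decide, per use case, which fields belong in that prompt and where they come from.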

3 - LLMs are not AGI and agents need to be programmed manually.

Large Language Models are not Artificial General Intelligence. Listen to some Lex Fridman podcasts with Yann LeCun or Sam Altman if you need convincing on this one. LLMs are pretty limited in their abilities and "just" spit out text based on context. Within that capability they are already incredibly useful, but this is only the start. If we do some clever prompt engineering and we allow their response to issue commands, we can set up an Agent structure to perform tasks. One of the most straightforward agent structures is ChatGPT where it acts as a chat assistant to a user. Others can perform Retrieval Augmented Generation (RAG) to dynamically fetch external information into context.
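As a rough illustration of the RAG idea, the sketch below picks the most relevant snippets from a small in-memory list and puts them into the prompt. It is a toy under stated assumptions: real systems typically use embeddings and a vector store, while this one swaps in plain word overlap so it runs without dependencies. The function names `tokenize`, `retrieve` and `build_rag_prompt` are invented for this example.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents sharing the most words with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap at all, then rank the rest.
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Put the retrieved snippets in front of the question."""
    snippets = retrieve(question, documents)
    context = "\n".join(f"- {snippet}" for snippet in snippets)
    return f"Use only this context:\n{context}\n\nQuestion: {question}"
```

Even in this toy form you can see the work involved: deciding what the document collection is, how relevance is scored, and how many snippets fit in the prompt.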

Think of the LLM as the book-smart intern that will be handling questions: it is able to give textbook answers based on its information about the world at large. Think of the agent structure as the manager that gives instructions, checks the answers etc. To get the most out of the intern we need to give it the right instructions, give it the right context and permissions to look up information, and finally check the answers. For the foreseeable future, all this agent logic has to be programmed manually for the specific use case you want to use it for. Read: quite a lot of work. The good news is that you can expect a TON of improvements and out-of-the-box integrations in this space over the next couple of years, but an LLM without an agent structure is not going to be very useful for most products and hard work will be required.
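The intern/manager split can be sketched as a small loop: give instructions, let the model ask for a lookup, feed the result back, and check the answer before accepting it. Everything here is an assumption made for illustration: the `LOOKUP:` command convention, the `run_agent` function, and `fake_llm`, which is a stand-in so the example runs without any real model.

```python
from typing import Callable


def run_agent(
    question: str,
    llm: Callable[[str], str],
    lookup: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
    max_steps: int = 3,
) -> str:
    """Manager loop: instruct the model, serve its lookups, check its answer."""
    prompt = (
        "Answer the question. If you need data, reply with 'LOOKUP: <key>'.\n"
        f"Question: {question}"
    )
    for _ in range(max_steps):
        reply = llm(prompt).strip()
        if reply.startswith("LOOKUP:"):
            # The model issued a command: run it and add the result to context.
            key = reply[len("LOOKUP:"):].strip()
            prompt += f"\nLookup result for {key}: {lookup(key)}"
            continue
        if is_acceptable(reply):
            return reply
        prompt += "\nYour previous answer was rejected. Try again."
    raise RuntimeError("No acceptable answer within the step budget")


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: asks for data once, then answers."""
    if "Lookup result" not in prompt:
        return "LOOKUP: vacation_days"
    return "You have 25 vacation days."
```

Calling `run_agent` with `fake_llm`, a dictionary-backed `lookup`, and a check such as `lambda a: "25" in a` walks through one lookup and one accepted answer. The command format, the permitted lookups, and the acceptance check are exactly the parts that have to be designed per use case.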

4 - LLMs don't understand specialized problems.

LLMs are trained on public information on the internet, and English currently dominates there. A lot more information is non-public, and a lot of content is not in English, so a generic model has seen comparatively little of either.

One of my friends (hi Fedor!) has a company that applies AI to speed up tender management. Their software scans the documents with details about the tender, and teams can collaborate to come back with a bid. They apply AI to find information quicker and answer questions. This is a very specialized field where one line on page 85 of document 14 might be critical to the viability of the bid. There's a ton of very specific terms that most people will never have heard of. What's more, the languages and rules vary wildly by country. We cannot expect a generic LLM to give expert-level answers in this context.

5 - Integrating AI in everything is a lot of work.

We've seen that thinking about the right questions is a lot of work. We've seen that getting the right context and prompts is a lot of work. We've seen that creating Agents is a lot of work. If we do all of this, it gives us AI power in one part of our product. So if we imagine a product that wants a simple "smart input field" that supports AI-powered autocomplete, we have now built one "smart input field". Unfortunately, your product still has 100 dumb input fields. You'll need to think about how to make each of those smart. Right now each of those 100 fields will require manual work, as you'll have to think about what specific context the LLM should get for that place in the UI, and who is allowed to get what context into the autocomplete functionality (e.g. an admin prompt might get different context than a guest user's). Making your whole application smart will take a lot of effort.
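One way to picture the per-field, per-role work is a small registry that says which context sources may be fed into the autocomplete prompt. This is only a sketch: the field names, role names, context sources and the `allowed_context` helper are all hypothetical.

```python
from typing import Mapping

# Hypothetical registry: for each input field, the context sources that
# each role may feed into the autocomplete prompt.
FIELD_CONTEXT_RULES: dict[str, dict[str, list[str]]] = {
    "ticket_reply": {
        "admin": ["customer_history", "internal_notes"],
        "guest": [],
    },
    "project_title": {
        "admin": ["existing_titles"],
        "guest": ["existing_titles"],
    },
}


def allowed_context(
    field: str, role: str, available: Mapping[str, str]
) -> dict[str, str]:
    """Return only the context this role may use for this field."""
    sources = FIELD_CONTEXT_RULES.get(field, {}).get(role, [])
    return {name: available[name] for name in sources if name in available}
```

Unknown fields or roles get no context at all, which is the safe default. Now imagine filling in and maintaining that registry for 100 fields.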

Years ago only laptops had wifi. Then came the smartphones, then the TVs, then the thermostats, then the washing machines, then the doorbells, and we're nowhere near the end.

For AI there's a similarly long tail of applications where AI can add value, and we'll be working on incorporating them for many, many years. It's an incredible time to be in software engineering!

Thoughts on #5: makes me wonder if there are enough architects today, or architects with "enough stuff", to make this anything but a "decade of crappy work" headed our way. My take? Offload by designing all apps to be more configurable, and let the ecosystem build it out. Leadership needs to note the importance of extensibility - form around that idea, or watch your app be replaced. https://medium.com/@penaname/integrating-ai-youre-not-doing-something-right-bba69a6ea455

Your insight is helpful.
