[TSTIL] Certinator

[ This is a part of "The Software That I Love", a series of posts about software that I created or had a small part in ]

2015 - Certinator

My brain is different from that of most people. This is a bit exaggerated, but I either understand something completely or I'm very confused. A coworker recently said that I somehow "know what I don't know". This is a blessing and a curse. A blessing because when I get it I am able to see bugs or solutions in the blink of an eye. A curse because I'm kind of useless and doubt everything until I understand a topic 100%.

Both `git` and SSL/TLS were topics that I didn't get for a long time. When I learned the git commands I was useless, but once I learned the data structures I became a git expert overnight. It's a beautiful idea brilliantly executed. SSL/TLS was the same.

In the Mendix Cloud, customers needed to add custom domains for their apps. As we were enterprisey, this had to be HTTPS, but hardly any low-code developers understood how that worked. Once I "got it" I was able to help them out and debug things quickly. I built a small tool, "certinator", for this purpose. If you pasted your certificate chain, it gave you feedback on it or autocompleted the missing parts. The tool never got far, but building it was a nice journey.
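To make "feedback or autocomplete" a bit more concrete, here is a minimal sketch in Go. It is not certinator's actual code. The function names (`parseChain`, `findIssuer`, `completeChain`) are made up for illustration, and it assumes the pasted chain is ordered leaf first and that a set of known intermediates is available from somewhere.

```go
package main

import (
	"bytes"
	"crypto/x509"
	"encoding/pem"
	"errors"
	"fmt"
	"io"
	"os"
)

// parseChain decodes every CERTIFICATE block in pemData, in input order.
func parseChain(pemData []byte) ([]*x509.Certificate, error) {
	var chain []*x509.Certificate
	for {
		var block *pem.Block
		block, pemData = pem.Decode(pemData)
		if block == nil {
			break // no more PEM blocks
		}
		if block.Type != "CERTIFICATE" {
			continue // skip keys and other block types
		}
		cert, err := x509.ParseCertificate(block.Bytes)
		if err != nil {
			return nil, err
		}
		chain = append(chain, cert)
	}
	if len(chain) == 0 {
		return nil, errors.New("no certificates found in input")
	}
	return chain, nil
}

// findIssuer returns the first candidate that signed cert, or nil.
func findIssuer(cert *x509.Certificate, candidates []*x509.Certificate) *x509.Certificate {
	for _, c := range candidates {
		if bytes.Equal(cert.RawIssuer, c.RawSubject) && cert.CheckSignatureFrom(c) == nil {
			return c
		}
	}
	return nil
}

// completeChain appends issuers from known (hypothetical input: a list of
// common intermediates) until it hits a self-signed cert or finds no issuer.
func completeChain(chain, known []*x509.Certificate) []*x509.Certificate {
	out := append([]*x509.Certificate(nil), chain...)
	for len(out) > 0 && len(out) < 10 { // length cap guards against loops
		last := out[len(out)-1]
		if bytes.Equal(last.RawIssuer, last.RawSubject) {
			break // self-signed: nothing left to add
		}
		issuer := findIssuer(last, known)
		if issuer == nil {
			break
		}
		out = append(out, issuer)
	}
	return out
}

func main() {
	input, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, "read error:", err)
		return
	}
	chain, err := parseChain(input)
	if err != nil {
		fmt.Fprintln(os.Stderr, "feedback:", err)
		return
	}
	// Feedback: each certificate should be signed by the one after it.
	for i, cert := range chain {
		fmt.Printf("%d: %s\n", i, cert.Subject.CommonName)
		if i+1 < len(chain) && cert.CheckSignatureFrom(chain[i+1]) != nil {
			fmt.Printf("   not signed by certificate %d\n", i+1)
		}
	}
}
```

Run it with something like `go run main.go < chain.pem`. The completion step only does something useful once `known` is filled with real intermediates, which is where the rest of this story comes in.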

- Certinator needed to autocomplete intermediate certificates.

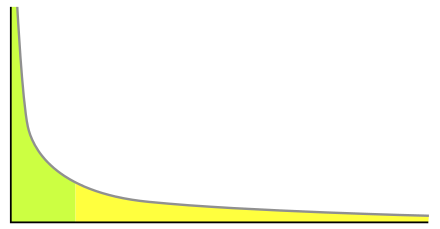

- For that I wanted to find all common intermediate certificates in the world.

- For that I needed to connect to millions of popular websites and look at their certificate chain.

- For that I needed to find a list of millions of websites.

- For that I could use the CommonCrawl dataset and extract URLs.

- For that I set up a huge EC2 instance in the same region as the CommonCrawl data. I optimized and processed 10TB of data on it within a couple of hours.

- Then I connected to all domains and collected the certificate chain with a tiny `golang` program.

Watching the machine crunch through the data on 32 cores and saturate the 10 Gbps network connection for hours on end was really incredible.

When it was done, I created this blog post: https://blog.waleson.com/2016/01/parsing-10tb-of-metadata-26m-domains.html . I posted it with a rather clickbaity title on Hacker News and got to #1 for a couple of hours. My blog post got about 50k hits in one day. That was cool.

We used certinator within Mendix every now and then to debug some certificate issues. My friend Sebastian in the CloudOps team took over that part of the job and I think he used the tool for a couple of years. You can find the source code here: https://github.com/jtwaleson/certinator

A couple of years later I got a message from a startup in San Francisco. They wanted to extract data from the CommonCrawl archives too and had seen my blog post. I was excited and offered to build it for about 3k. It would probably take two days to build, max. They accepted right away, so I was probably too cheap ;) Extracting their data was much more compute-intensive, so I went with an EC2 spot-instance fleet. Processing the entire archive took a couple of hours with about 100 large machines. It was incredible to see. If you want something like this, call me anytime because I enjoy this kind of work.

previous: 2015 - InstaDeploy
